Informing Parents with Perplexity and Python

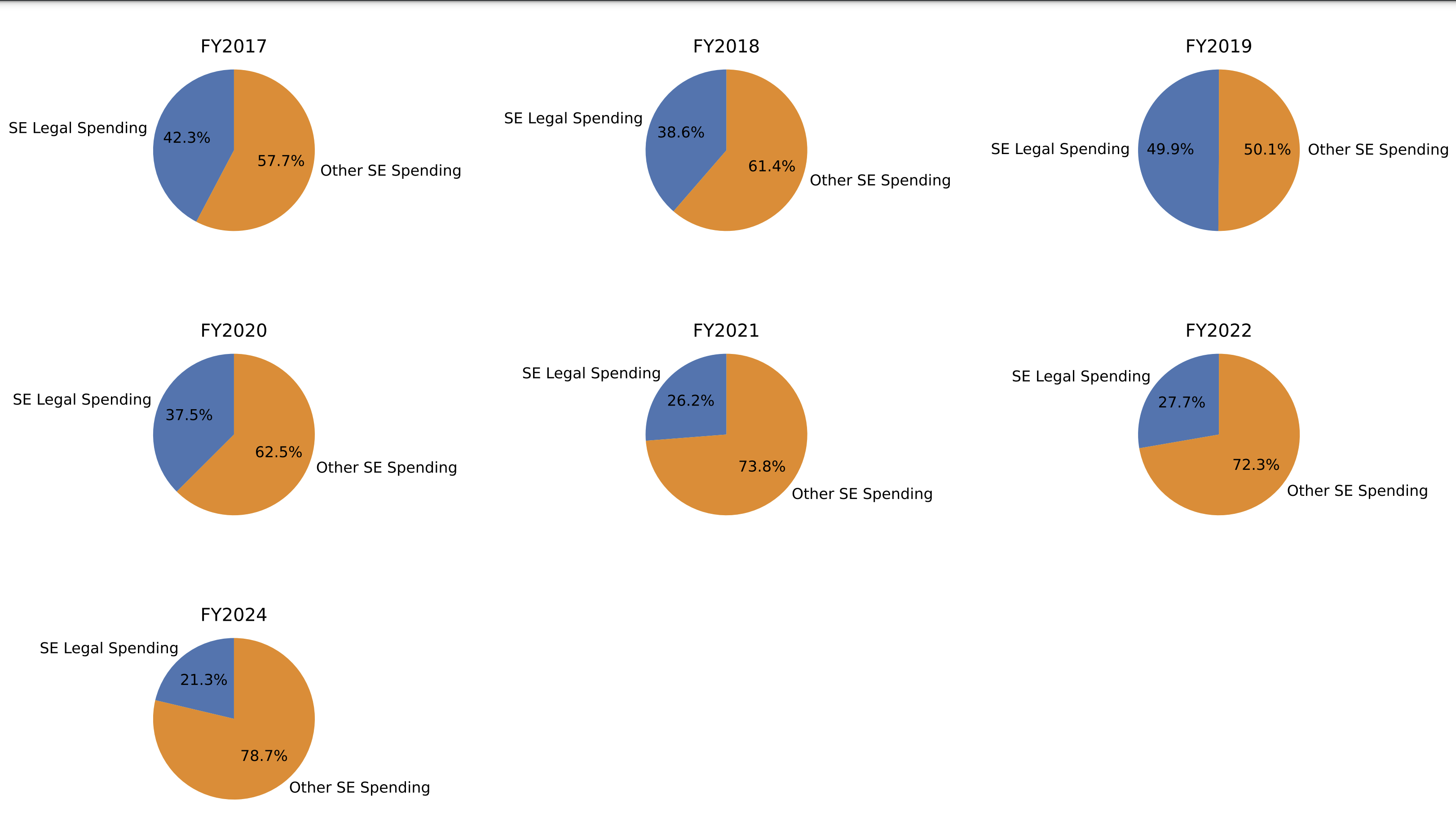

Whenever I try a new tool, I immediately look for a serious context of use. The marketing examples that vendors typically give are great for getting an idea of what the tool can do, but I really need to introduce it into my workflow. My latest project has been to create a report for parents to understand the mix of legal spending versus other spending in special education.

Due Process Costs

Aside from mediation, one mechanism for disputing items related to a child’s IEP is “due process.” Due process is a formal legal proceeding for parents to present evidence to dispute an IEP finding. Disputes can fall under categories like classroom placement, services requested, or an initial assessment to qualify for an IEP. If you want to learn more about the IEP process, I wrote an article on Medium a while back.

The process is meant to be unbiased and fair. This fairness may be the case on paper, but parents are usually at a disadvantage. Depending on the case’s complexity, parents typically need to hire a lawyer specializing in education law. The school district will also have a lawyer on their side and have the capability to “outspend” any individual. The costs can add up quickly, with the majority of the burden falling to the already stressed parents if they do not win the case.

I want to raise public awareness about the reality of due process and help parents stay informed. So, I created a report from publicly available data to inform parents of the spending. I used Python to gather and parse the data, with Perplexity AI as my research and coding partner.

Talking Through the Code

I don’t have a background in professional Python development. My background is in solution architecture and infrastructure design. I’m a practitioner turned product manager. So, while I have utilized some light scripting in the past, I am fairly new to Python. In this regard, Perplexity AI was there for me throughout the whole process.

Here is one of the questions in a long chain of questions answered.

Here’s a Python script that allows you to place a variable number of pie charts on a grid using Matplotlib:

import matplotlib.pyplot as plt

from matplotlib import gridspec

def create_pie_chart(data, labels, title):

"""

Function to create a pie chart and return the Axes object.

Args:

data (list): List of numerical values for the pie slices.

labels (list): List of labels for the pie slices.

title (str): Title for the pie chart.

Returns:

Axes object representing the pie chart.

"""

fig, ax = plt.subplots()

ax.pie(data, labels=labels, autopct='%1.1f%%')

ax.set_title(title)

return ax

# Get the number of pie charts from the user

num_charts = int(input("How many pie charts do you want to place on the grid? "))

# Create pie chart objects and store them in a list

pie_charts = []

for i in range(num_charts):

data = input(f"Enter the data for pie chart {i+1} (comma-separated): ").split(',')

data = [float(x) for x in data]

labels = input(f"Enter the labels for pie chart {i+1} (comma-separated): ").split(',')

title = input(f"Enter the title for pie chart {i+1}: ")

pie_charts.append(create_pie_chart(data, labels, title))

# Calculate the number of rows and columns for the grid

num_cols = int(num_charts ** 0.5)

num_rows = (num_charts + num_cols - 1) // num_cols

# Set up the figure and grid

fig = plt.figure(figsize=(10, 8))

gs = gridspec.GridSpec(num_rows, num_cols)

# Place the pie chart objects on the grid

for i, ax in enumerate(pie_charts):

row = i // num_cols

col = i % num_cols

ax_grid = fig.add_subplot(gs[row, col])

ax_grid.pie(ax.patches[0].get_facecolor(), labels=ax.texts[0].get_text(), autopct='%1.1f%%')

ax_grid.set_title(ax.title.get_text())

# Adjust spacing and display the figure

plt.tight_layout()

plt.show()

Explanation:

- We define a function

create_pie_chartthat takes the data, labels, and title as arguments, creates a pie chart usingplt.subplots()andax.pie(), and returns theAxesobject representing the pie chart.- We prompt the user to enter the number of pie charts they want to place on the grid using

input().- We iterate

num_chartstimes and prompt the user to enter the data, labels, and title for each pie chart. We create pie chart objects by calling thecreate_pie_chartfunction with the user-provided data, labels, and title, and store the returnedAxesobjects in a list calledpie_charts.- We calculate the number of rows and columns for the grid based on the number of pie charts. We use the square root of

num_chartsas the number of columns and calculate the number of rows accordingly.- We set up a new

Figureobjectfigand create aGridSpecobjectgswith the calculated number of rows and columns.- We iterate over the

pie_chartslist usingenumerateto get both the indexiand the pie chart objectax.- For each iteration, we calculate the row and column positions in the grid using

row = i // num_colsandcol = i % num_cols.- We create a new

Axesobjectax_gridand add it to the grid usingfig.add_subplot(gs[row, col]).- To recreate the pie chart on the new

Axesobject, we access the data and labels from the original pie chart object using:

ax.patches.get_facecolor()to get the data (colors of the pie slices)ax.texts.get_text()to get the labels We pass these to thepie()method of the newAxesobject.

- We set the title of the new

Axesobject usingax.title.get_text()to retrieve the title from the original pie chart object.- Finally, we adjust the spacing between subplots using

plt.tight_layout()and display the figure usingplt.show().This script allows the user to specify the number of pie charts they want to place on the grid and provides a flexible way to input the data, labels, and title for each pie chart. The pie charts are then dynamically placed on a grid based on the number of charts specified.

Analyzing the Code In Context

In a few hours, I had a complete solution to create my pdf report. However, I suspect the code was not optimized, as it took a minute or two to run. I decided to try Perplexity AI again. This time, I uploaded my code and the output of cProfile (which I wouldn’t have known about had it not been for Perplexity AI) and asked for an analysis. The result blew my mind. Based on the output of the cProfile I ran on my code, Perplexity AI was able to suggest some areas for improvement.

The cProfile output provides detailed timing information for the various functions and methods called during the execution of the code. Here are some key observations and potential areas for optimization:

- Data I/O and parsing:

- A significant amount of time (around 9.8 seconds) is spent in

readers.py:854(read_csv)andcommon.py:645(get_handle), which are related to reading CSV data from URLs.- Optimizing the data ingestion process, such as using more efficient libraries or caching the data locally, could potentially improve performance.

- Matplotlib rendering:

- Several functions related to Matplotlib rendering, such as

table.py:417(_get_grid_bbox),backend_agg.py:452(_print_pil), andbackend_agg.py:462(print_png), consume a considerable amount of time.- Optimizing the rendering process, such as reducing the number of plots or using a more lightweight backend, could potentially improve performance.

- Numerical operations:

- Functions like

formatters.py:331(__call__)andformatters.py:220(catch_format_error), which are likely related to formatting numerical data, take a noticeable amount of time.- Optimizing these operations or using more efficient libraries like NumPy could potentially improve performance.

- String operations:

- Functions like

object_array.py:141(_str_replace)andobject_array.py:427(_str_strip), which are related to string operations, consume a significant amount of time.- Optimizing these operations or using more efficient string manipulation techniques could potentially improve performance.

- HTTP requests:

- Functions like

request.py:1307(do_open),request.py:529(_open), andclient.py:278(_read_status)are related to making HTTP requests and handling responses.- Optimizing the HTTP request process, such as using a more efficient library or implementing caching mechanisms, could potentially improve performance.

- Axis and tick formatting:

- Functions like

axis.py:1544(_get_tick)andaxis.py:2141(_get_ticks_position)are related to formatting axes and ticks in Matplotlib plots.- Optimizing these operations or reducing the number of ticks could potentially improve performance.

- Profiling overhead:

- The profiling process itself introduces some overhead, as evidenced by the time spent in functions like

cProfile.run()andpstats.Stats().sort_stats().- While this overhead is relatively small, it’s important to consider it when interpreting the profiling results.

It’s important to note that the profiling results can vary depending on the input data and the specific operations performed. Additionally, some of the time spent in certain functions may be due to external dependencies or libraries, which may require further investigation or optimization. To optimize the code effectively, it’s recommended to focus on the most time-consuming functions and operations, and consider alternative approaches, libraries, or algorithms that can potentially improve performance. Additionally, profiling with different input data sets and configurations can provide further insights into potential bottlenecks.

Follow the entire thread. What I could uncover (again, I’m a Python novice) was wild.

I’m enjoying my time with Perplexity AI. It has quickly earned a spot in my daily toolkit alongside apps like Obsidian. More importantly, it helps me promptly finish the projects I deeply care about.

You can follow my other writing and connect on social media by exploring the links at harleystagner.me